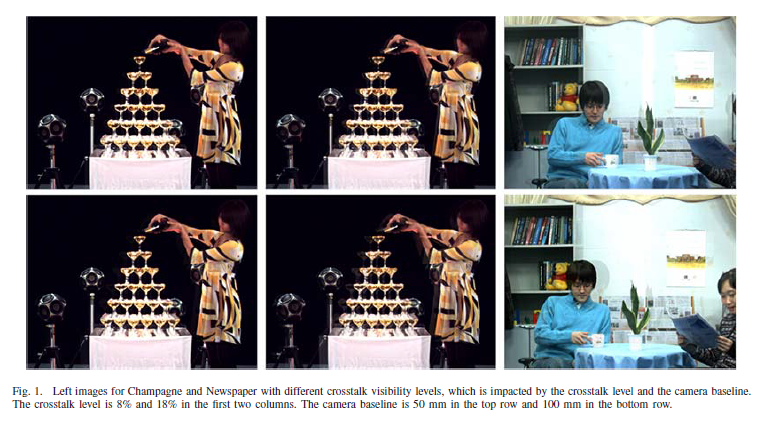

Robust stereoscopic crosstalk prediction using color cues and stereo images

Abstract: We propose new metrics that predict perceived stereoscopic crosstalk from the original stereo images alone, rather than from both the original and the ghosted images. The proposed metrics are based on color information. First, we extract a disparity map, a color difference map and a color contrast map from the original image pairs. We then use these maps to construct two new metrics, Vdispc and Vdlogc: Vdispc accounts for the effects of the disparity map and the color difference map, while Vdlogc addresses the influence of the color contrast map. Prediction performance is evaluated on various types of stereoscopic crosstalk images. By combining Vdispc and Vdlogc, we further propose the metric Vpdlc, which achieves a higher correlation with perceived subjective crosstalk scores. Experimental results show that the new metrics outperform previous methods, which indicates that color information is a key factor in predicting crosstalk visibility. Furthermore, we construct a new dataset to evaluate the proposed metrics.