Joint Estimation of Depth and Motion from Monocular Endoscopy Image Sequence using Multi-loss Rebalancing Network

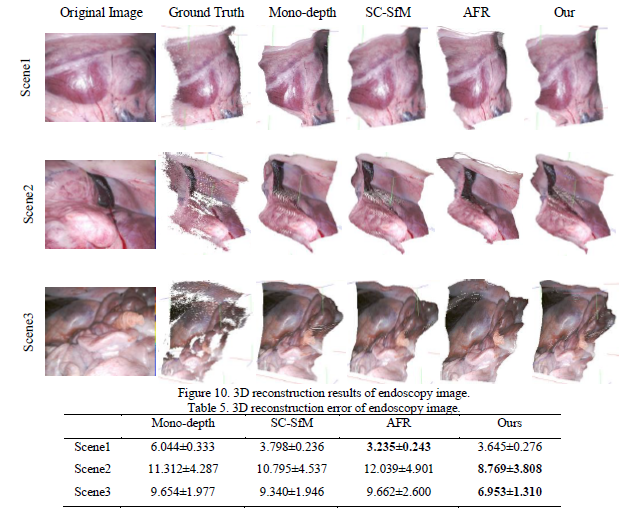

Abstract—Building an in vivo three-dimensional (3D) surface model from monocular endoscopy is an effective way to improve the intuitiveness and precision of clinical laparoscopic surgery. This paper proposes a multi-loss rebalancing-based method for joint estimation of depth and motion from a monocular endoscopy image sequence. Feature descriptors provide supervisory signals for the depth estimation network and the motion estimation network. The depth estimation network incorporates the epipolar constraints between sequence frames into the neighborhood spatial information to improve the accuracy of depth estimation. The motion estimation network uses the reprojection information from depth estimation to reconstruct the camera motion through a multi-view relative pose fusion mechanism. Relative response, feature consistency, and epipolar consistency loss functions are defined to improve the robustness and accuracy of the proposed unsupervised learning-based method. The method is evaluated on public datasets. The motion estimation error in three scenes decreased by 42.1%, 53.6%, and 50.2%, respectively, and the average 3D reconstruction error is 6.456 ± 1.798 mm. These results demonstrate that the method generates reliable depth estimation and trajectory reconstruction results for endoscopy images and has meaningful clinical applications.
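To make the idea of an epipolar consistency term concrete, the following is a minimal sketch of the textbook epipolar constraint x2^T E x1 = 0 with E = [t]_x R, averaged over matched points. It is not the loss defined in this paper, whose exact form is not given in the abstract. The function names (`essential_matrix`, `epipolar_loss`, and the helpers) are hypothetical, the points are assumed to be in normalized camera coordinates (intrinsics already removed), and the pose and 3D points are synthetic values chosen only for illustration.

```python
import math

def skew(t):
    """Return the 3x3 cross-product matrix [t]_x of a 3-vector t."""
    tx, ty, tz = t
    return [[0.0, -tz, ty],
            [tz, 0.0, -tx],
            [-ty, tx, 0.0]]

def matmul(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def matvec(a, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]

def essential_matrix(R, t):
    """E = [t]_x R for a relative pose defined by X2 = R X1 + t."""
    return matmul(skew(t), R)

def epipolar_loss(E, pts1, pts2):
    """Mean algebraic epipolar error |x2^T E x1| over matched points."""
    total = 0.0
    for x1, x2 in zip(pts1, pts2):
        Ex1 = matvec(E, x1)
        total += abs(sum(x2[i] * Ex1[i] for i in range(3)))
    return total / len(pts1)

def project(X):
    """Project a 3D point to normalized homogeneous image coordinates."""
    return [X[0] / X[2], X[1] / X[2], 1.0]

def rot_y(angle):
    """Rotation about the camera y-axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

# Synthetic example (assumed values, not taken from the paper).
R = rot_y(0.1)
t = [0.05, 0.0, 0.01]
points_3d = [[0.1, -0.2, 1.0], [-0.3, 0.1, 1.5], [0.2, 0.2, 2.0]]

pts1 = [project(X) for X in points_3d]
pts2 = [project([a + b for a, b in zip(matvec(R, X), t)]) for X in points_3d]

# The true pose satisfies the constraint; a perturbed rotation does not.
loss_true = epipolar_loss(essential_matrix(R, t), pts1, pts2)
loss_wrong = epipolar_loss(essential_matrix(rot_y(0.3), t), pts1, pts2)
print(loss_true < loss_wrong)
```

With the correct relative pose the residual vanishes up to floating-point error, while an incorrect rotation yields a strictly larger value, which is what allows a term of this kind to act as a training signal for pose and depth estimates.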