Augmented reality navigation with real-time tracking for facial repair surgery

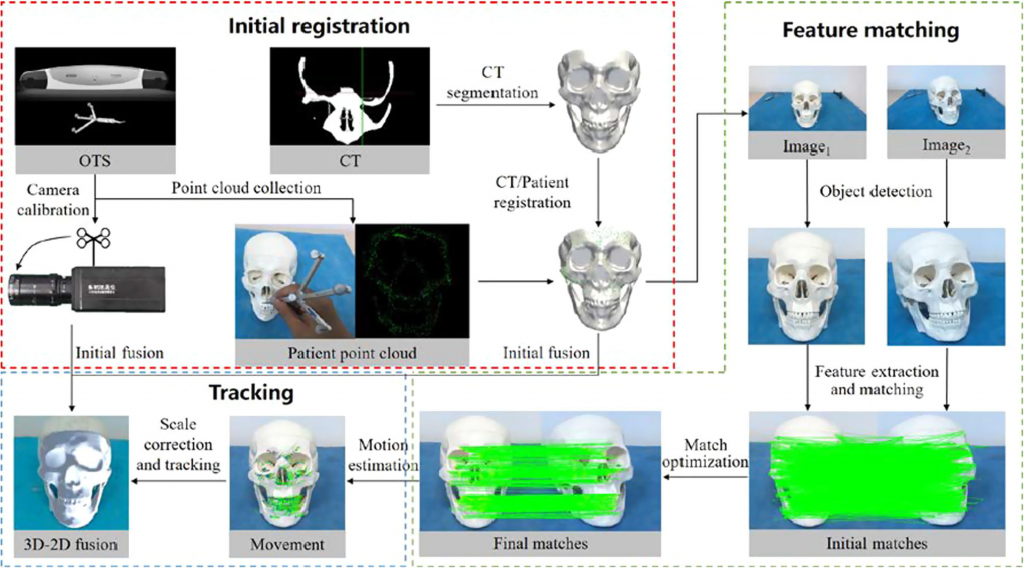

Abstract

Purpose: Facial repair surgeries (FRS) require high accuracy to navigate safely and quickly around critical anatomy. The purpose of this paper is to develop a method that directly tracks the position of the patient using video data acquired from a single camera, achieving noninvasive, real-time, and highly accurate positioning in FRS.

Methods: Our method first performs camera calibration and registers the surface segmented from computed tomography (CT) to the patient. Then, a two-step constraint algorithm, consisting of a feature local constraint and a distance standard deviation constraint, is used to quickly find the optimal feature matching pairs. Finally, the camera motion and the patient motion, decomposed from the image motion matrix, are used to track the camera and the patient, respectively.

Results: The proposed method achieved RMS fusion errors of 1.44 ± 0.35, 1.50 ± 0.15, and 1.63 ± 0.03 mm in the skull phantom, cadaver mandible, and human experiments, respectively. These errors were lower than those of the optical tracking system-based method. Additionally, the proposed method could process video streams at up to 24 frames per second, which can meet the real-time requirements of FRS.

Conclusions: The proposed method does not rely on tracking markers attached to the patient. It can run automatically to maintain a correct augmented reality scene and to counteract the loss of positioning accuracy caused by patient movement during surgery.
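The abstract names the two constraints used to filter feature matches but not their exact rules. The sketch below is one plausible reading, offered under stated assumptions rather than as the authors' algorithm: the feature local constraint is modeled as a neighborhood-consistency vote (a match survives if at least `min_support` other matches lie within `radius` pixels of it in both frames), and the distance standard deviation constraint drops matches whose displacement length lies more than `k` standard deviations from the mean. The function names and the parameters `radius`, `min_support`, and `k` are hypothetical.

```python
import math
import statistics
from typing import List, Tuple

Point = Tuple[float, float]
Match = Tuple[Point, Point]  # (keypoint in frame A, matched keypoint in frame B)


def local_constraint(matches: List[Match], radius: float, min_support: int) -> List[Match]:
    """Hypothetical feature local constraint: keep a match only if enough other
    matches are near it in frame A AND near it in frame B (consistent local motion).
    Brute-force O(n^2); a real implementation would likely use a spatial index."""
    kept = []
    for i, (a_i, b_i) in enumerate(matches):
        support = 0
        for j, (a_j, b_j) in enumerate(matches):
            if i != j and math.dist(a_i, a_j) <= radius and math.dist(b_i, b_j) <= radius:
                support += 1
        if support >= min_support:
            kept.append((a_i, b_i))
    return kept


def distance_std_constraint(matches: List[Match], k: float) -> List[Match]:
    """Hypothetical distance standard deviation constraint: drop matches whose
    displacement length is more than k population standard deviations from the mean."""
    if len(matches) < 2:
        return list(matches)
    lengths = [math.dist(a, b) for a, b in matches]
    mean = statistics.mean(lengths)
    std = statistics.pstdev(lengths)
    return [m for m, d in zip(matches, lengths) if abs(d - mean) <= k * std]


def two_step_filter(matches: List[Match], radius: float = 20.0,
                    min_support: int = 2, k: float = 1.5) -> List[Match]:
    """Apply the local constraint first, then the distance standard deviation constraint."""
    return distance_std_constraint(local_constraint(matches, radius, min_support), k)
```

Running the local constraint first means the global statistic is computed only on matches that already have local support; an isolated, grossly wrong match would otherwise inflate the standard deviation and weaken the second step.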