Homography-based robust pose compensation and fusion imaging for augmented reality based endoscopic navigation system

Abstract—Background: Augmented reality (AR) based fusion imaging in endoscopic surgery relies on the quality of image-to-patient registration and camera calibration. These two offline steps are usually performed independently, each yielding its own target transformation. Each solution may be optimal in isolation, but their combination is not necessarily globally optimal: the residual errors of both steps accumulate and eventually lead to inaccurate AR fusion.

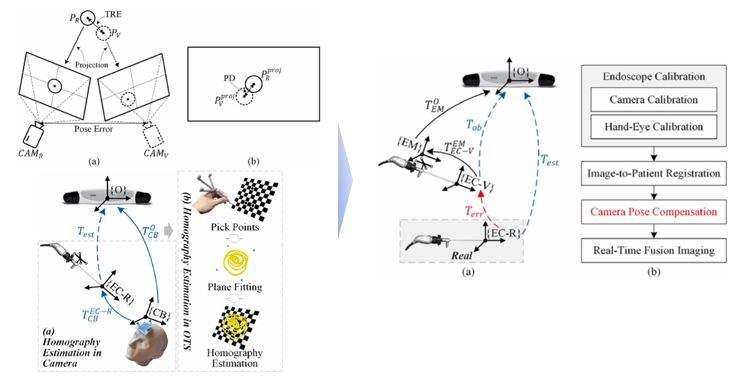

Methods: After a careful analysis of the principle of AR imaging, a robust online calibration framework was proposed for an endoscopic camera to enable accurate AR fusion. A 2D checkerboard-based homography estimation algorithm was proposed to estimate the local pose of the endoscopic camera, and the least-squares method was used, in combination with the optical tracking system, to calculate the compensation matrix.
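As an illustration of the homography estimation step, the following minimal sketch fits a planar homography between checkerboard corner coordinates and their detected image positions by linear least squares. It is not the authors' implementation: the function names (`solve_linear`, `estimate_homography`, `project`), the normalization $h_{33} = 1$, and the use of normal equations are assumptions made for brevity. A production system would more likely rely on a library routine with coordinate normalization and outlier rejection.

```python
def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def estimate_homography(board_pts, image_pts):
    """Least-squares homography mapping board (X, Y) to image (u, v).

    Assumes h33 = 1 (hypothetical choice) and at least four
    non-collinear correspondences.
    """
    rows, rhs = [], []
    for (X, Y), (u, v) in zip(board_pts, image_pts):
        # u * (h31 X + h32 Y + 1) = h11 X + h12 Y + h13, and likewise for v
        rows.append([X, Y, 1.0, 0.0, 0.0, 0.0, -u * X, -u * Y])
        rhs.append(u)
        rows.append([0.0, 0.0, 0.0, X, Y, 1.0, -v * X, -v * Y])
        rhs.append(v)
    # Normal equations: (A^T A) h = A^T b
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(8)] for i in range(8)]
    atb = [sum(r[i] * t for r, t in zip(rows, rhs)) for i in range(8)]
    h = solve_linear(ata, atb)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def project(H, pt):
    """Apply homography H to a 2D point and dehomogenize."""
    X, Y = pt
    w = H[2][0] * X + H[2][1] * Y + H[2][2]
    u = (H[0][0] * X + H[0][1] * Y + H[0][2]) / w
    v = (H[1][0] * X + H[1][1] * Y + H[1][2]) / w
    return (u, v)
```

Given the camera intrinsics, a homography of this kind can be decomposed into the rotation and translation of the camera relative to the checkerboard plane, which is the sense in which a homography yields a local pose estimate.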

Results: Compared with conventional methods, the proposed compensation method improved AR fusion performance: it reduced physical error by up to 82%, reduced pixel error by up to 83%, and improved target coverage by up to 6%. Experiments with simulated mechanical noise showed that the proposed method effectively corrected the fusion errors caused by rotation of the endoscopic tube without recalibrating the camera. Furthermore, the simulation results showed that the proposed method is robust to noise.

Conclusions: Overall, the experimental results demonstrated the effectiveness of the proposed compensation method and online calibration framework, and indicated considerable potential for clinical practice.